PLLaVA: Parameter-free LLaVA Extension from Images to Videos for Video Dense Captioning

A video assistant.

With imaginary captioning ability.

See our PLLAVA in the Pool →

7b Gradio 13b Gradio 34b Gradio

Some of the fantastic results are shown here. The model can produce detailed captions for the videos.

More on the Generated Captions. These are videos from the Inter-4k dataset. Click on the video to see the detailed captioning.

We've open-sourced all our results: you can find every caption result on the Inter-4k dataset here. Pick a variant and slide through to get a sense of what PLLaVA is capable of!

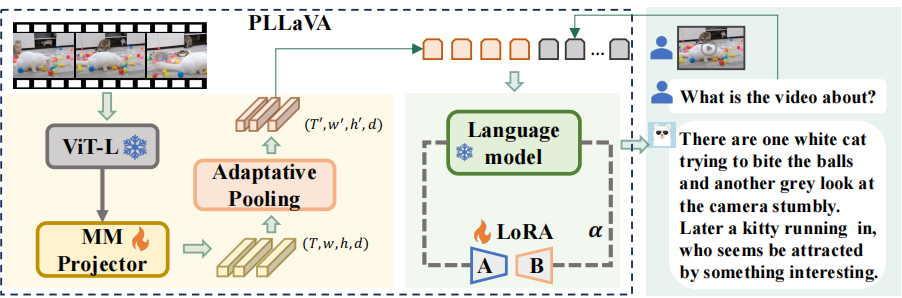

We set out to build a video assistant that can do captioning, detailed video reasoning, and much more. With a simple yet powerful pooling module design, we manage to bring an image-finetuned Vision-LLM to on-par ability in the video modality.

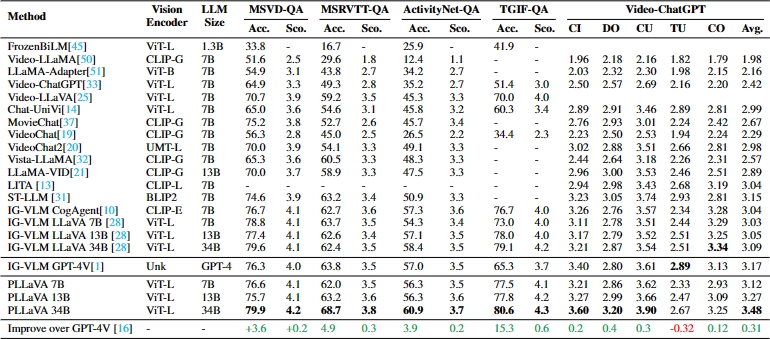

Vision-language pre-training (VLP) has significantly elevated performance across a range of vision-language applications. Yet, the pre-training process for video-related tasks demands an exceptionally high degree of computational and data resources. This paper investigates a straightforward, highly efficient, and resource-light approach to adapting an existing image-language pre-training model for video data. Our preliminary experiments reveal that directly fine-tuning pre-trained image-language models with multiple frames on video datasets leads to performance saturation or even a drop in caption-related tasks. Besides, it is also vulnerable to prompts and tends to provide short descriptions. We conducted a deep analysis and observed that the performance saturation and the vulnerability might be related to the dominant patches that exist in some single video patches. We then propose a simple pooling strategy to smooth the feature distribution along the temporal dimension and thus reduce the dominant impacts from some extreme tokens. The new model is termed Pooling LLaVA, or PLLaVA in short. With the proposed pooling strategy, we achieve new state-of-the-art performance on all evaluated datasets. Notably, on the recent popular Video ChatGPT benchmark, PLLaVA achieves a score of 3.48 out of 5 on average of five evaluated dimensions, which is the new state-of-the-art score on the leaderboard and is 0.31 higher than the previous SOTA results from GPT4V (IG-VLM). On the latest multi-choice benchmark MVBench, PLLaVA achieves 58.1% accuracy on average across 20 sub-tasks, which is the new state-of-the-art result and is 14.5% higher than GPT4V (IG-VLM).

The pooling strategy has two dimensions: the spatial dimension and the temporal dimension. We empirically found that reducing the spatial dimension while keeping a larger temporal dimension leads to better model performance than reducing the temporal dimension directly.
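To make the two pooling choices concrete, here is a minimal NumPy sketch, not the released PLLaVA implementation. It assumes frame features laid out as (T, H, W, C), uses plain non-overlapping average pooling, and picks illustrative sizes (16 frames, a 24x24 patch grid, 8 channels); the function name `avg_pool_video_features` is hypothetical. It shows that pooling spatially and pooling temporally can reach the same visual token budget while keeping very different numbers of frames.

```python
import numpy as np


def avg_pool_video_features(feats, out_t, out_h, out_w):
    """Average-pool features of shape (T, H, W, C) to (out_t, out_h, out_w, C).

    Assumption for this sketch: each input size is an exact multiple of the
    requested output size, so pooling windows do not overlap.
    """
    t, h, w, c = feats.shape
    if t % out_t or h % out_h or w % out_w:
        raise ValueError("input sizes must be divisible by output sizes")
    # Split every pooled axis into (number of windows, window length),
    # then average over the window-length axes.
    blocks = feats.reshape(
        out_t, t // out_t,
        out_h, h // out_h,
        out_w, w // out_w,
        c,
    )
    return blocks.mean(axis=(1, 3, 5))


# Illustrative sizes only (assumed, not taken from the text above).
rng = np.random.default_rng(0)
feats = rng.standard_normal((16, 24, 24, 8))

# Option A: keep all 16 frames, halve the spatial grid.
spatial = avg_pool_video_features(feats, out_t=16, out_h=12, out_w=12)

# Option B: keep the full spatial grid, pool 16 frames down to 4.
temporal = avg_pool_video_features(feats, out_t=4, out_h=24, out_w=24)

# Both options yield the same number of visual tokens.
tokens_spatial = spatial.shape[0] * spatial.shape[1] * spatial.shape[2]
tokens_temporal = temporal.shape[0] * temporal.shape[1] * temporal.shape[2]
print(tokens_spatial, tokens_temporal)  # 2304 2304
```

Under these assumptions, both options pass 2304 tokens to the language model, but option A preserves four times as many time steps, which corresponds to the configuration reported above as working better.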

We compare the performance of PLLaVA with recent popular methods on both question-answer and captioning datasets. The results are shown below.

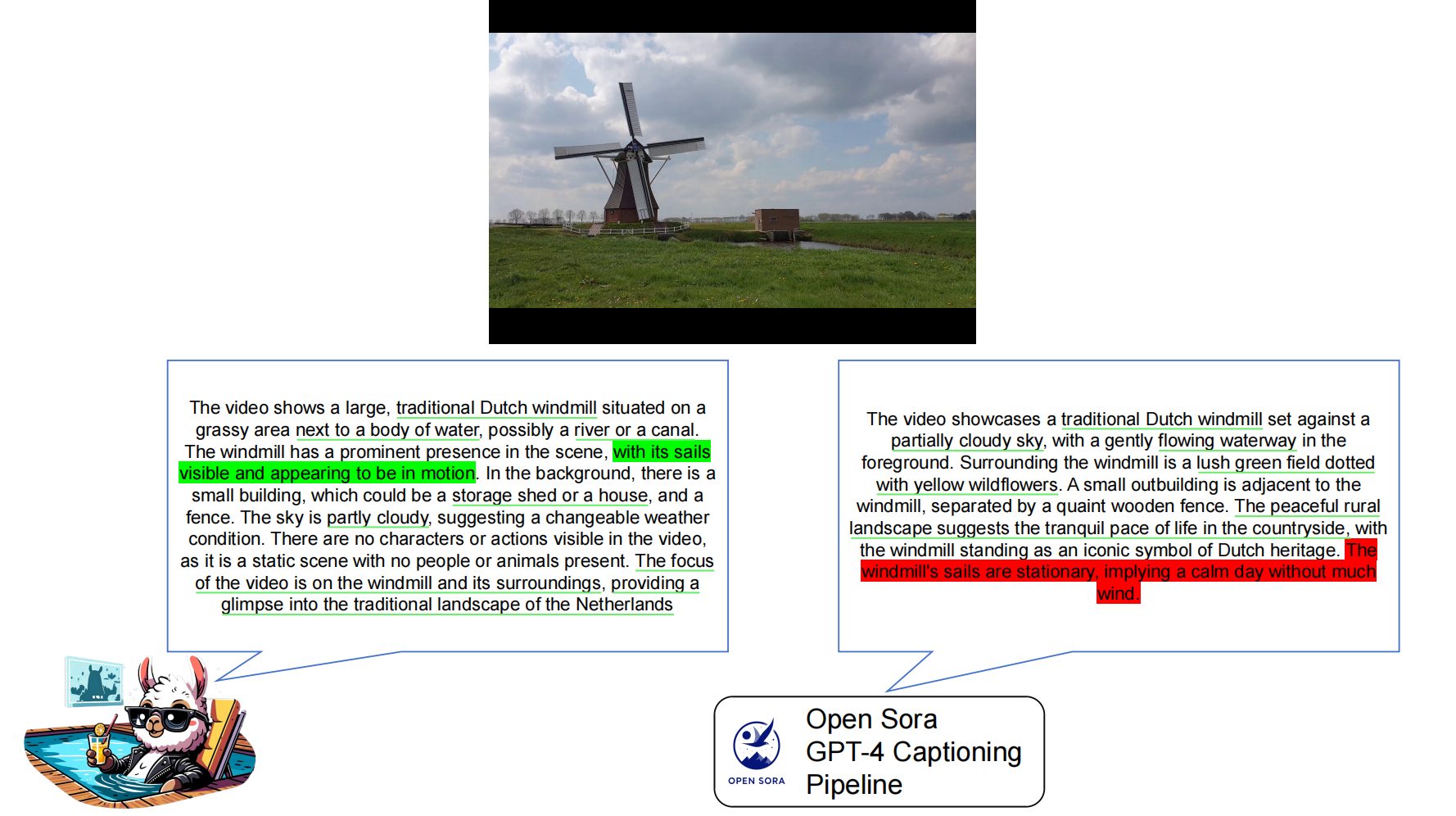

Finally, we used PLLaVA-34B to produce captions for the entire Inter-4k dataset in both English and Chinese. The dataset contains 1k high-resolution videos, each with a caption generated by our best model. You can check out the results in the gallery Gradio. If you find our dataset useful, feel free to download the released recaptioning dataset directly from our Hugging Face dataset repo. The results are shown in comparison with captions from image-based models: using a video large language model improves the description of motion in the captions.

We've released all the source code for PLLaVA. If you find our model powerful, feel free to visit our GitHub repo, set up your local PLLaVA, and play around.

If you have any interesting ideas, feel free to reach out to us!